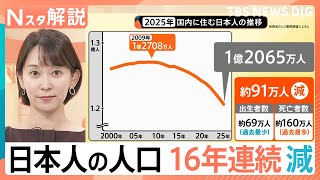

OSAKA, Jul 16 (News On Japan) - Sexual deepfakes, created using generative AI, are rapidly emerging as a new form of digital abuse, with cases increasing across Japan. Individuals, especially minors, are having their photos misused without their knowledge to produce sexually explicit images or videos, often in under a minute.

One disturbing trend involves photos of girls in school gym uniforms being digitally altered to depict nudity. In many instances, graduation album photos are exploited. "It looks like the clothes are stripped off," said one source familiar with how easily these AI tools operate.

Whereas celebrities were the main targets in earlier years, more recent victims include ordinary middle and high school students, and even elementary school children. According to a study by Hiiragi Net, which monitors digital abuse, there were 252 confirmed cases of sexual deepfakes involving minors between March and June this year. Many of the images were full-body nudes or manipulated to appear as though the children were engaging in sexual acts.

Graduation season in March and April appears to be a peak period for these abuses. The moment schools distribute albums, perpetrators begin uploading the photos to apps or websites where generative AI strips clothing and alters body features. Some photos are even shared on social media platforms with tags like "graduation album," and in sections labeled "sotsu" (short for sotsugyō, or graduation) that contain multiple AI-altered images.

In some cases, classmates themselves have requested nude edits of fellow students by submitting original photos to these platforms. The creation process is alarmingly easy—users simply upload a headshot, choose options like body size, click generate, and within about 30 seconds, a realistic fake nude image is produced.

One journalist who tested a service described being shocked at the realism: "If I hadn’t been told, I wouldn’t have realized the photo had been created by AI. It looked completely natural."

Hiiragi Net’s representative Nagamori warned that once images are created and shared online, individuals have little control. "Graduation photos or event pictures are often beyond personal management. In truth, full self-protection is no longer realistic. The government must implement proper countermeasures."

In response, some photo album publishers are beginning to change their practices. One Osaka-based company said it now considers omitting identifying details, or separating names from faces. However, security remains a major concern. In April, a printing company in Sendai reported a cyberattack that may have leaked the personal data of 173,000 students nationwide. The company says it now uses top-tier security systems to prevent recurrence.

"We take pride in creating graduation albums as a cherished part of student life. But once the product leaves our hands, we have no control," said a representative of the company.

Beyond graduation albums, other photos have also been targeted. In some cases, ID and password leaks from nursery school photo sites led to images being taken and manipulated. Even wedding photos shared on social media have reportedly been turned into deepfakes.

Concerns about regulation are mounting. While Japan currently lacks laws specifically targeting sexual deepfakes, some local governments are taking action. Tottori Prefecture introduced an ordinance in April banning the creation and distribution of child pornography using generative AI, with penalties of up to 50,000 yen and mandatory deletion orders. From next month, those who fail to comply could face further fines or public name disclosure.

Experts note, however, that national legislation is still absent, and global enforcement is complicated—particularly when the offenders or hosting services are overseas. In contrast, the European Union is moving to regulate AI more broadly, including imposing penalties on companies that produce tools used in human rights violations.

"In Japan, regulation must be implemented urgently before the harm spreads further," said Nagamori. "This is a terrifying new era where any child—or adult—could have their image stolen, stripped, and turned into a deepfake in seconds."

Source: KTV NEWS