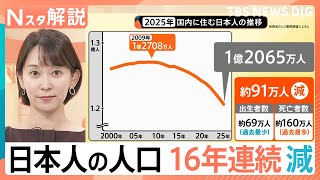

Aug 08 (News On Japan) - The spread of AI-generated sexual deepfakes—images and videos in which individuals, including children, are falsely depicted in explicit content—has become a growing societal concern in Japan. While celebrities have long been targeted, there is now a disturbing rise in the use of school graduation photos and other images of minors to create sexualized deepfake content that is then uploaded to social media platforms.

At a June expert panel meeting, Japan's Children and Families Agency began discussing possible regulations. In response, Tottori Prefecture implemented a new ordinance on August 4th that includes administrative penalties for creating or distributing such content.

Where are these sexual deepfakes being generated? In many cases, they originate from websites that charge users to create fake nude images using AI. When the program's staff tested one such site by uploading an image of a fully clothed fictional woman, the site returned a convincingly realistic fake nude image, with body parts artificially generated as though the woman had actually removed her clothing.

On social media and anonymous forums, some users advertise services where they accept images, process them into fake nudes, and demand payment via digital transactions. Although journalists attempted to contact several such accounts, none responded before the specified deadline.

Notably, generative AI models like ChatGPT cannot produce such content. Stability AI, the UK company behind the image generation platform Stable Diffusion, updated its terms of service on July 31st to explicitly ban sexually explicit content. Yet while many AI developers have implemented safeguards, fake nudes can still be created easily through specialized websites.

But are such services operating legally? According to attorney Hajime Ideta, who specializes in generative AI law, there is currently no law in Japan that directly prohibits the creation of deepfakes. However, depending on how the content is generated, it could potentially violate existing laws related to copyright, defamation, or the right of publicity.

For instance, using someone's likeness to create deepfake pornography may infringe on copyright or personality rights. If the content is distributed online and damages a person's reputation, those who created and shared it may also be held legally accountable.

Regarding the websites offering nude editing services, Ideta explains that if such platforms are merely tools for users to manipulate images on their own, the operators may avoid direct legal responsibility. However, if these operators actively promote or encourage the creation of illegal content—particularly involving minors—they could be seen as complicit and thus liable under the law.

The same applies to individuals acting as intermediaries: if a person requests a fake nude and the subject is a minor, both the requester and the service provider may be subject to prosecution under Japan's laws prohibiting child pornography. Even if the subject is an adult, processing and using someone's image without consent may constitute copyright infringement, a violation of moral rights, or a breach of privacy.

Ideta emphasizes three pillars for addressing the issue: law, technology, and ethics. On the legal front, he points to the United States, South Korea, and China, where regulations targeting deepfake pornography are already being developed, and urges Japan to follow suit.

From a technological standpoint, he says, tools must be developed and made widely available to help victims detect and combat such content, and platforms must adopt stronger detection systems to prevent harm.

Lastly, he highlights the importance of ethical norms and education. As deepfake technology becomes more accessible to younger generations, teaching responsible use of generative tools is vital to prevent abuse.

In Tottori Prefecture, a new ordinance went into effect on August 4th that explicitly defines AI-manipulated images of real youth as child pornography. Those who create or share such images may be fined up to 50,000 yen. Failure to comply with takedown orders could result in public disclosure of names and additional penalties.

Commentators praised the move, noting that children must be shielded from the devastating effects of becoming unwilling subjects in explicit deepfakes. Internationally, cases of suicide among minors have been linked to such abuses. With social media enabling widespread dissemination, the harm is often irreversible.

While Japan's Ministry of Justice has acknowledged that AI-generated sexual deepfakes involving real children could fall under existing child pornography laws, enforcement often relies on other statutes such as defamation. Many legal experts argue that this patchwork approach is insufficient.

They stress the need for a dedicated law that directly addresses deepfake pornography, particularly when it depicts real individuals, and above all minors. Such a law, they argue, would send a strong societal message and serve as a deterrent.

Currently, operators of deepfake services often shield themselves by including disclaimers in their terms of use stating that child pornography creation is prohibited. But critics argue that merely stating so is not enough if the services are knowingly enabling illegal use.

The business model itself, which profits from potentially harmful use cases, has drawn sharp criticism. Though the technology may not be illegal in and of itself, the moral responsibility of developers and service providers is being questioned.

There is also concern about government overreach if content creation tools are heavily regulated. Some fear that attempts to control generative content could lead to censorship. However, others argue that when the harm—especially involving minors—is so severe, society must weigh the risks and prioritize victim protection.

Experts agree that it is time to move swiftly. Without clear laws, victims may continue to suffer while perpetrators remain unpunished. For real individuals—especially children—being falsely depicted in explicit content can inflict lifelong trauma.

As technology evolves, legal and ethical frameworks must keep pace. What is urgently needed, many say, is a firm stance that protects vulnerable individuals while promoting the responsible use of AI.

Source: ABEMA